Continuous Mass Function is Population Probability

Discrete Random Variable and Mathematical Expectation-II

As we already know, a discrete random variable is a random variable that takes a countable number of possible values. The two important concepts related to a discrete random variable are its probability and its distribution function, which are given the following specific names.

Probability Mass function (p.m.f)

The probability mass function gives the probability of each value of a discrete random variable: if X takes the values x1, x2, x3, x4, …, xk, then the corresponding probabilities P(x1), P(x2), P(x3), P(x4), …, P(xk) make up its probability mass function.

Specifically, for X=a, P(a)=P(X=a) is its p.m.f.

From here onwards we use the term probability mass function for the probability of a discrete random variable. All the properties of probability carry over to the probability mass function, for example non-negativity and the fact that the p.m.f. values sum to one.

Cumulative Distribution Function (c.d.f)/Distribution Function

For a discrete random variable X with a probability mass function, the distribution function defined as

F(x)=P(X<=x)

is the cumulative distribution function (c.d.f.) of the random variable.
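As a minimal sketch of these two definitions, the following Python snippet stores a p.m.f. as a dictionary and builds the c.d.f. from it. The fair-die p.m.f. and the helper name `cdf` are assumptions chosen for illustration, not part of the original text.

```python
from fractions import Fraction

# Hypothetical p.m.f. of a fair six-sided die, stored as value -> probability.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}


def cdf(pmf, x):
    """Return F(x) = P(X <= x) by summing the p.m.f. over values not exceeding x."""
    return sum(p for value, p in pmf.items() if value <= x)


# Basic p.m.f. properties: every value is non-negative and the values sum to one.
assert all(p >= 0 for p in pmf.values())
assert sum(pmf.values()) == 1

print(cdf(pmf, 3))  # 1/2
```

Exact fractions are used here so that the check that the probabilities sum to one is not affected by floating-point rounding.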

The mathematical expectation of such a random variable was defined as

$$E[X] = \sum_{i} x_i P(x_i)$$

We now look at some results on mathematical expectation.

1. If x1, x2, x3, x4, … are the values of a discrete random variable X with respective probabilities P(x1), P(x2), P(x3), P(x4), …, then the expectation of a real-valued function g of X is

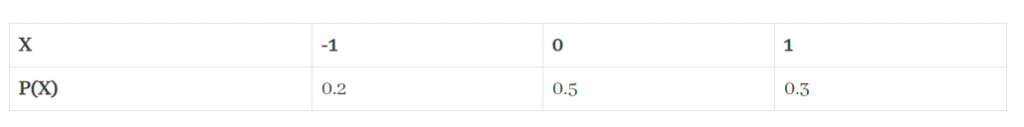

Example: for the probability mass function p(-1) = 0.2, p(0) = 0.5, p(1) = 0.3, find $E[X^3]$.

Here $g(X) = X^3$, so

$$E[X^3] = (-1)^3 (0.2) + (0)^3 (0.5) + (1)^3 (0.3) = 0.1$$

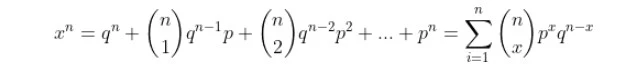
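The expectation of a function of a discrete random variable can be sketched in a few lines of Python. The helper name `expectation` is hypothetical, and the p.m.f. values are the ones used in the example computation (0.2, 0.5 and 0.3 for the values -1, 0 and 1).

```python
def expectation(pmf, g=lambda x: x):
    """Return E[g(X)] as the sum of g(x) * P(x) over all values x."""
    return sum(g(x) * p for x, p in pmf.items())


# p.m.f. taken from the worked example: value -> probability.
pmf = {-1: 0.2, 0: 0.5, 1: 0.3}

# Third moment, E[X^3]; rounded for display because floats are inexact.
e_x3 = expectation(pmf, lambda x: x ** 3)
print(round(e_x3, 10))  # 0.1
```

Passing a different function `g`, for example `lambda x: x ** n`, gives the nth moment in the same way.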

In a similar way, for any order n we can write

$$E[X^n] = \sum_{i} x_i^n P(x_i)$$

which is known as the nth moment.

2. If a and b are constants then

E[aX + b]=aE[X] + b

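This follows directly from the definition of expectation, since the probabilities sum to one:

$$E[aX + b] = \sum_{i} (a x_i + b) P(x_i) = a \sum_{i} x_i P(x_i) + b \sum_{i} P(x_i) = aE[X] + b$$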
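The identity E[aX + b] = aE[X] + b can be checked numerically. This is only a sketch: the p.m.f. and the constants a = 3 and b = 2 are arbitrary values assumed for illustration.

```python
from fractions import Fraction

# Hypothetical p.m.f. (value -> probability), written with exact fractions.
pmf = {-1: Fraction(1, 5), 0: Fraction(1, 2), 1: Fraction(3, 10)}
a, b = 3, 2  # arbitrary constants chosen for illustration

# E[X] from the definition.
e_x = sum(x * p for x, p in pmf.items())

# E[aX + b] computed directly as the expectation of g(X) = aX + b.
e_ax_b = sum((a * x + b) * p for x, p in pmf.items())

# Both sides of the identity agree exactly.
assert e_ax_b == a * e_x + b
print(e_x, e_ax_b)  # 1/10 23/10
```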

Variance in terms of Expectation.

For a discrete random variable X with mean μ = E[X], the variance, denoted by Var(X) or $\sigma^2$, is defined in terms of expectation as

$$\mathrm{Var}(X) = E[(X - \mu)^2]$$

and this can be simplified further as

$$\mathrm{Var}(X) = E[(X - \mu)^2] = E[X^2 - 2\mu X + \mu^2] = E[X^2] - 2\mu E[X] + \mu^2 = E[X^2] - 2\mu^2 + \mu^2 = E[X^2] - \mu^2$$

This means the variance can be written as the expectation of the square of the random variable minus the square of its expectation, i.e.

$$\mathrm{Var}(X) = E[X^2] - (E[X])^2$$

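Example: calculate the variance of the outcome when a fair die is thrown.

Solution: when a fair die is thrown, the probability of each face is

p(1)=p(2)=p(3)=p(4)=p(5)=p(6)=1/6

To calculate the variance we first find the expectation of the random variable and of its square:

$$E[X] = 1 \cdot \tfrac{1}{6} + 2 \cdot \tfrac{1}{6} + 3 \cdot \tfrac{1}{6} + 4 \cdot \tfrac{1}{6} + 5 \cdot \tfrac{1}{6} + 6 \cdot \tfrac{1}{6} = \tfrac{7}{2}$$

$$E[X^2] = 1^2 \cdot \tfrac{1}{6} + 2^2 \cdot \tfrac{1}{6} + 3^2 \cdot \tfrac{1}{6} + 4^2 \cdot \tfrac{1}{6} + 5^2 \cdot \tfrac{1}{6} + 6^2 \cdot \tfrac{1}{6} = \tfrac{91}{6}$$

Using the formula for the variance obtained above,

$$\mathrm{Var}(X) = E[X^2] - (E[X])^2 = \tfrac{91}{6} - \left(\tfrac{7}{2}\right)^2 = \tfrac{35}{12}$$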
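The die calculation can be reproduced exactly with Python's `fractions` module. This is a minimal sketch; the variable names are chosen for illustration.

```python
from fractions import Fraction

# p.m.f. of a fair die: each face 1..6 has probability 1/6.
pmf = {face: Fraction(1, 6) for face in range(1, 7)}

mean = sum(x * p for x, p in pmf.items())                 # E[X]
second_moment = sum(x ** 2 * p for x, p in pmf.items())   # E[X^2]
variance = second_moment - mean ** 2                      # E[X^2] - (E[X])^2

print(mean, second_moment, variance)  # 7/2 91/6 35/12
```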

An important identity for the variance is the following.

- For arbitrary constants a and b we have

$$\mathrm{Var}(aX + b) = a^2 \, \mathrm{Var}(X)$$

This can be shown easily, since E[aX + b] = aμ + b:

$$\mathrm{Var}(aX + b) = E[(aX + b - a\mu - b)^2] = E[a^2 (X - \mu)^2] = a^2 E[(X - \mu)^2] = a^2 \, \mathrm{Var}(X)$$
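As a quick numerical check of this identity, the sketch below compares the variance of a fair die with the variance of a transformed die aX + b. The constants a = 2 and b = 5 and the helper name `variance` are assumptions for illustration only.

```python
from fractions import Fraction


def variance(pmf):
    """Return Var(X) = E[(X - mu)^2] for a p.m.f. given as value -> probability."""
    mean = sum(x * p for x, p in pmf.items())
    return sum((x - mean) ** 2 * p for x, p in pmf.items())


die = {face: Fraction(1, 6) for face in range(1, 7)}
a, b = 2, 5  # arbitrary constants; a must be non-zero so the new values stay distinct

# p.m.f. of Y = aX + b: same probabilities, transformed values.
transformed = {a * x + b: p for x, p in die.items()}

# The shift b has no effect and the scale a enters squared.
assert variance(transformed) == a ** 2 * variance(die)
print(variance(die), variance(transformed))  # 35/12 35/3
```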

Bernoulli Random variable

The Swiss mathematician James Bernoulli defined the Bernoulli random variable as a random variable for a random experiment that has only two outcomes, success or failure.

i.e. when the outcome is a success, X=1

and when the outcome is a failure, X=0

So the probability mass function for the Bernoulli random variable is

p(0) = P{X=0}=1-p

p(1) =P{X=1}=p

where p is the probability of success and 1-p is the probability of failure.

We can also write q = 1-p, where q is the probability of failure.

Since this random variable takes only the two values 0 and 1, it is a discrete random variable.

Example: Tossing a coin.
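The Bernoulli p.m.f. is small enough to write out directly. The sketch below assumes a fair coin (p = 0.5) with heads counted as success; the function name `bernoulli_pmf` is hypothetical.

```python
def bernoulli_pmf(x, p):
    """Return P(X = x) for a Bernoulli random variable with success probability p."""
    if not 0 <= p <= 1:
        raise ValueError("p must lie between 0 and 1")
    if x == 1:
        return p        # success
    if x == 0:
        return 1 - p    # failure
    return 0.0          # any other value has probability zero


p = 0.5  # assumed fair coin, heads = success
total = bernoulli_pmf(0, p) + bernoulli_pmf(1, p)
print(bernoulli_pmf(1, p), bernoulli_pmf(0, p), total)
```

The two probabilities sum to one, as required of any probability mass function.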

Binomial Random Variable

If a random experiment whose only outcomes are success and failure is repeated for n independent trials, each with the same probability of success, then the random variable X that counts the number of successes in those n trials is known as a binomial random variable.

In other words, if p is the probability of success in a single Bernoulli trial and q=1-p is the probability of failure, then the probability that success occurs exactly x times in n trials is

$$P(X = x) = \binom{n}{x} p^x q^{n-x}, \quad x = 0, 1, \ldots, n$$

or, written with the index i,

$$p(i) = \binom{n}{i} p^i (1-p)^{n-i}, \quad i = 0, 1, \ldots, n$$

Example: If we toss two coins six times and getting head is success and remaining occurrences are failures then its probability will be

in the similar way we can calculate for any such experiment.

The binomial random variable gets its name because its probabilities are the terms of the binomial expansion of

$$(p + q)^n = \sum_{x=0}^{n} \binom{n}{x} p^x q^{n-x}$$

If we put n=1, this reduces to the Bernoulli random variable.

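Example: if five fair coins are tossed and the outcomes are independent, what is the probability distribution of the number of heads?

Here, if we take the random variable X as the number of heads, it is a binomial random variable with n=5 and probability of success 1/2.

So, from the probability mass function of the binomial random variable, we get

$$P(X=0) = \binom{5}{0} \left(\tfrac{1}{2}\right)^0 \left(\tfrac{1}{2}\right)^5 = \tfrac{1}{32}$$

$$P(X=1) = \binom{5}{1} \left(\tfrac{1}{2}\right)^1 \left(\tfrac{1}{2}\right)^4 = \tfrac{5}{32}$$

$$P(X=2) = \binom{5}{2} \left(\tfrac{1}{2}\right)^2 \left(\tfrac{1}{2}\right)^3 = \tfrac{10}{32}$$

$$P(X=3) = \binom{5}{3} \left(\tfrac{1}{2}\right)^3 \left(\tfrac{1}{2}\right)^2 = \tfrac{10}{32}$$

$$P(X=4) = \binom{5}{4} \left(\tfrac{1}{2}\right)^4 \left(\tfrac{1}{2}\right)^1 = \tfrac{5}{32}$$

$$P(X=5) = \binom{5}{5} \left(\tfrac{1}{2}\right)^5 \left(\tfrac{1}{2}\right)^0 = \tfrac{1}{32}$$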
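The binomial probabilities for the number of heads in five coin tosses can be computed with a short Python sketch. The function name `binomial_pmf` is an assumption for illustration; `math.comb` needs Python 3.8 or later.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(i, n, p):
    """Return P(X = i) = C(n, i) * p^i * (1 - p)^(n - i)."""
    return comb(n, i) * p ** i * (1 - p) ** (n - i)


n, p = 5, Fraction(1, 2)  # five tosses, probability of heads 1/2
probs = [binomial_pmf(i, n, p) for i in range(n + 1)]

for i, prob in enumerate(probs):
    print(i, prob)  # fractions print in lowest terms, e.g. 10/32 shows as 5/16

# The probabilities over i = 0..n sum to one.
assert sum(probs) == 1
```

Setting `n = 1` in the same function gives the two Bernoulli probabilities 1-p and p.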

Example: in a certain company, the probability that a product is defective is 0.01. The company sells the product in packs of 10 and offers its customers a money-back guarantee that at most 1 of the 10 products in a pack is defective. What proportion of the packs sold must the company replace?

Here, if X is the random variable representing the number of defective products in a pack, and we assume the products are defective independently of one another, then X is binomial with n=10 and p=0.01, so the probability that a pack will be returned is

$$P(X > 1) = 1 - P(X=0) - P(X=1) = 1 - \binom{10}{0} (0.01)^0 (0.99)^{10} - \binom{10}{1} (0.01)^1 (0.99)^9 \approx 0.004$$

so about 0.4 percent of the packs must be replaced.
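The probability that a pack is returned, that is, that it contains more than one defective product, can be sketched in Python as the complement of the first two binomial probabilities. The helper name `binomial_pmf` is hypothetical, and independence of the defects is assumed.

```python
from math import comb


def binomial_pmf(i, n, p):
    """Return P(X = i) for a binomial random variable with parameters n and p."""
    return comb(n, i) * p ** i * (1 - p) ** (n - i)


n, p = 10, 0.01  # pack size and probability that a single product is defective

# A pack is returned when it has 2 or more defectives: 1 - P(X = 0) - P(X = 1).
p_return = 1 - binomial_pmf(0, n, p) - binomial_pmf(1, n, p)
print(round(p_return, 4))  # 0.0043
```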

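Example (chuck-a-luck / wheel of fortune): in a particular game of chance played in hotels, a player bets on any one of the numbers from 1 to 6. Three dice are then rolled, and if the number bet on by the player appears once, twice or three times, the player wins that many units: 1 unit if it appears on one die, 2 units if on two dice and 3 units if on all three dice. If the number does not appear at all, the player loses 1 unit. Check, with the help of probability, whether the game is fair for the player.

If we assume that the dice are fair and no con techniques are used, and that the outcomes of the dice are independent, then the probability of success for each die is 1/6 and the probability of failure is 1 - 1/6 = 5/6, so this is an example of a binomial random variable with n=3.

So first we calculate the probabilities of the player's winnings, denoting the winnings by X:

$$P(X=-1) = \binom{3}{0} \left(\tfrac{1}{6}\right)^0 \left(\tfrac{5}{6}\right)^3 = \tfrac{125}{216}$$

$$P(X=1) = \binom{3}{1} \left(\tfrac{1}{6}\right)^1 \left(\tfrac{5}{6}\right)^2 = \tfrac{75}{216}$$

$$P(X=2) = \binom{3}{2} \left(\tfrac{1}{6}\right)^2 \left(\tfrac{5}{6}\right)^1 = \tfrac{15}{216}$$

$$P(X=3) = \binom{3}{3} \left(\tfrac{1}{6}\right)^3 \left(\tfrac{5}{6}\right)^0 = \tfrac{1}{216}$$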

Now, to decide whether the game is fair for the player, we calculate the expectation of the random variable:

$$E[X] = \frac{-125 + 75 + 30 + 3}{216} = -\frac{17}{216}$$

This means that, in the long run, the player loses on average 17 units for every 216 games played, so the game is not fair for the player.
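The expected winnings in this game can be verified with exact fractions. The sketch assumes, as the -125 term in the expectation implies, that the player loses 1 unit when the chosen number does not appear; the variable names are chosen for illustration.

```python
from fractions import Fraction
from math import comb

n, p = 3, Fraction(1, 6)  # three dice, chance 1/6 that a die shows the chosen number

# Probability that the chosen number appears on exactly i of the three dice.
appearances = {i: comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(n + 1)}

# Winnings X: lose 1 unit if the number never appears (assumed), otherwise win i units.
winnings_pmf = {(-1 if i == 0 else i): prob for i, prob in appearances.items()}

expected = sum(x * prob for x, prob in winnings_pmf.items())
print(expected)  # -17/216
```

A negative expectation means the game favours the house: on average the player loses 17 units per 216 games.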

Conclusion:

In this article we discussed some of the basic properties of a discrete random variable, its probability mass function and its variance. In addition, we have seen some types of discrete random variables. Before starting on continuous random variables, we try to cover all the types and properties of discrete random variables. For further reading, see:

Schaum's Outlines of Probability and Statistics

https://en.wikipedia.org/wiki/Probability


Source: https://lambdageeks.com/probability-mass-function/